Web scraping has become an indispensable tool for extracting valuable data from websites, enabling businesses and individuals to gain insights, automate processes, and make informed decisions. However, the practice of web scraping is not without its challenges and ethical considerations. To ensure the integrity of data collection and foster responsible practices, it is crucial to adhere to a set of web scraping best practices.

In this article, we will delve into the fundamental principles that guide ethical and efficient web scraping, covering legal considerations, planning and preparation, scraping techniques and tools, data extraction and parsing, anti-scraping measures, performance optimization, error handling, data privacy and security, documentation and maintenance, as well as the importance of ethics and social responsibility. By following these best practices, you can navigate the complex landscape of web scraping with confidence and integrity.

Understanding Legal and Ethical Considerations

In the vast expanse of the internet, a treasure trove of data awaits those who dare to explore. Web scraping, the process of extracting information from websites, has emerged as a powerful means to unlock this digital wealth. But before embarking on a scraping adventure, it is essential to navigate the legal and ethical landscapes that surround this practice.

So, what is web scraping? At its core, web scraping involves automated data extraction from websites. It enables you to gather information ranging from product prices and customer reviews to financial data and social media trends. With such vast possibilities, it’s no wonder that web scraping has garnered attention and raised questions about its legality and ethical implications.

When it comes to legality, the key principle to bear in mind is respecting website terms of service. Websites often have terms and conditions in place that outline the permitted use of their content. While some websites explicitly prohibit scraping, others may impose restrictions or require consent. It is crucial to familiarize yourself with these terms and ensure compliance to avoid any legal repercussions.

Additionally, copyright laws come into play when scraping copyrighted material. Intellectual property rights protect original content, such as articles, images, and videos. Unless you have explicit permission or the content falls under fair use provisions, scraping copyrighted material could infringe upon these rights. Understanding the nuances of copyright law is paramount to staying on the right side of legal boundaries.

Moreover, obtaining proper consent for scraping is an ethical consideration that should not be overlooked. Even where a website’s terms of service do not explicitly prohibit scraping, it is essential to ensure that the data owner or website owner has provided explicit consent for your scraping activities. Respecting the rights and intentions of website owners fosters a fair and ethical ecosystem for data collection.

By understanding and adhering to legal and ethical considerations, you can embark on your web scraping journey with confidence, knowing that you are upholding the principles of integrity and responsible data acquisition.

Planning and Preparation for Web Scraping

Imagine setting out on an adventure without a map or a destination in mind. Chances are, you’d end up lost and disoriented. The same holds true for web scraping. Before diving headfirst into the vast ocean of online data, proper planning and preparation are key to ensuring a successful and focused scraping endeavor.

The first step in this journey is defining the scraping scope and objectives. Clearly identify what data you need, the purpose behind collecting it, and how it aligns with your goals. Whether it’s market research, competitive analysis, or extracting specific information, a well-defined scope will guide your scraping efforts and yield meaningful results.

Next, identify your target websites and data sources. Determine which websites contain the information you seek and evaluate their suitability for scraping. Consider factors such as website structure, accessibility, and data availability. This step not only helps you narrow down your focus but also ensures that you’re scraping from reliable and relevant sources.
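One way to make the scope and target sources concrete is to write them down as data that your scraper checks before every request. The sketch below assumes Python 3.9+. The ScrapeScope class, the hosts, the path prefixes, and the field names are all hypothetical placeholders and are not part of any library.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class ScrapeScope:
    """A written-down scraping scope: why we scrape, where, and what we keep."""

    purpose: str
    allowed_hosts: frozenset[str]
    allowed_path_prefixes: tuple[str, ...]
    data_fields: tuple[str, ...]

    def in_scope(self, url: str) -> bool:
        """Return True only for http(s) URLs on an allowed host and path."""
        parts = urlparse(url)
        return (
            parts.scheme in ("http", "https")
            and parts.hostname in self.allowed_hosts
            and any(parts.path.startswith(p) for p in self.allowed_path_prefixes)
        )


# Hypothetical scope for a small price-monitoring project.
scope = ScrapeScope(
    purpose="weekly price comparison within one product category",
    allowed_hosts=frozenset({"shop.example.com", "store.example.org"}),
    allowed_path_prefixes=("/products/",),
    data_fields=("name", "price", "currency"),
)

print(scope.in_scope("https://shop.example.com/products/42"))    # True
print(scope.in_scope("https://shop.example.com/account/login"))  # False
```

If you call scope.in_scope(url) before queueing each link, a crawler cannot drift onto pages you never planned to collect. The purpose string also serves as a record of why the data is being gathered.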

Setting up a dedicated scraping environment is another crucial aspect of planning and preparation. Creating a separate and controlled environment for your scraping activities helps mitigate potential risks and ensures smooth operations. It allows you to test and refine your scraping code without impacting live websites or risking accidental damage. Consider using virtual environments or containers to isolate your scraping setup from other systems and applications.

Equipped with a clear scope, identified data sources, and a dedicated environment, you’re now ready to select the most suitable scraping techniques and tools. There are various approaches to web scraping, such as HTML parsing, utilizing APIs, or employing specialized scraping libraries and frameworks. Each method has its strengths and limitations, so it’s important to choose the approach that aligns with your scraping objectives and the structure of the target websites.

As you venture deeper into the realm of web scraping, you’ll encounter dynamic content and JavaScript-based websites that present unique challenges. These websites often load data dynamically, requiring additional steps to access and extract the desired information. Familiarize yourself with techniques like rendering JavaScript, using headless browsers, or interacting with API endpoints to effectively scrape such dynamic content.

By diligently planning and preparing for your scraping endeavor, you lay a solid foundation for success. A clear scope, defined data sources, a controlled environment, and appropriate scraping techniques will empower you to navigate the web’s intricate landscape with precision and efficiency.

Web Scraping Techniques and Tools

In the world of web scraping, having the right techniques and tools at your disposal is like possessing a set of master keys that can unlock a wealth of information. From HTML parsing to utilizing APIs, there are various approaches to extracting data from websites. Understanding these techniques and selecting the appropriate tools can make all the difference in the success of your scraping endeavors.

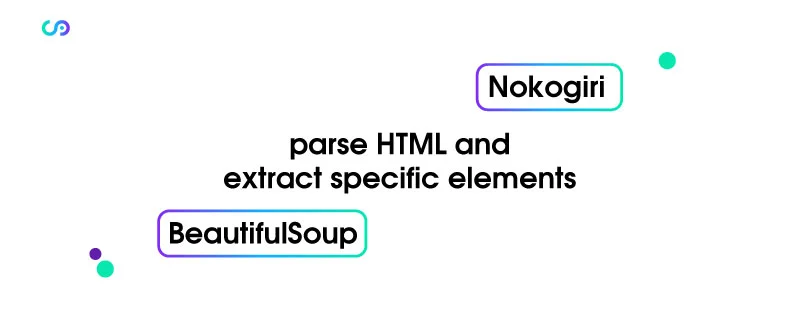

One of the most common techniques in web scraping is HTML parsing. HTML, the backbone of web pages, contains the structured information you’re after. By parsing the HTML code, you can navigate through the elements and extract the desired data. Libraries like BeautifulSoup in Python or Nokogiri in Ruby provide intuitive ways to parse HTML and extract specific elements, giving you the power to scrape websites effectively.
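Here is a minimal illustration of HTML parsing. The sketch extracts the text of every span element whose class list includes "price". It uses Python’s standard-library html.parser instead of BeautifulSoup, so it runs without extra installs. The markup and the price class name are assumptions about a hypothetical product page.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text inside every <span> whose class list contains "price".

    Minimal on purpose: it assumes price spans hold plain text, not nested spans.
    """

    def __init__(self):
        super().__init__()
        self.prices: list[str] = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "price" in classes:
            self._in_price = True
            self.prices.append("")

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices[-1] += data


def extract_prices(html: str) -> list[str]:
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return [price.strip() for price in parser.prices]


sample = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price sale">$4.50</span></li>
</ul>
"""
print(extract_prices(sample))  # ['$9.99', '$4.50']
```

If BeautifulSoup is installed, the same lookup is roughly [tag.get_text(strip=True) for tag in BeautifulSoup(html, "html.parser").select("span.price")]. That version also handles nested markup, which this minimal parser does not.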

Another approach to web scraping involves leveraging APIs (Application Programming Interfaces). Many websites offer APIs that allow developers to access and retrieve data in a structured and standardized format. Using an API simplifies the scraping process. It also means you are accessing data through a channel the website owner has sanctioned, although the API’s own terms and rate limits still apply. Familiarize yourself with the API documentation provided by websites to understand how to make requests, handle authentication, and extract the desired data.
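The following is a minimal sketch of consuming a paginated JSON API with Python’s standard library. Several details are hypothetical:

- the endpoint URL;
- the bearer-token header;
- the page and per_page query parameters;
- the items and next_page response fields.

Real APIs document their own authentication and pagination schemes.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator

# Hypothetical endpoint; the real URL, auth scheme and pagination come from the API docs.
BASE_URL = "https://api.example.com/v1/products"


def build_request(page: int, token: str) -> urllib.request.Request:
    """Build an authenticated GET request for one page of results."""
    query = urllib.parse.urlencode({"page": page, "per_page": 100})
    return urllib.request.Request(
        f"{BASE_URL}?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def fetch_json(req: urllib.request.Request) -> dict:
    """Perform the HTTP request and decode the JSON body."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def iter_items(
    token: str,
    fetch: Callable[[urllib.request.Request], dict] = fetch_json,
) -> Iterator[dict]:
    """Yield items page by page until the API stops returning a next page."""
    page = 1
    while page is not None:
        payload = fetch(build_request(page, token))
        yield from payload.get("items", [])
        page = payload.get("next_page")  # assumed to be null on the last page


if __name__ == "__main__":
    # Offline demo: a stub replaces the network call.
    fake_pages = {
        1: {"items": [{"id": 1}, {"id": 2}], "next_page": 2},
        2: {"items": [{"id": 3}], "next_page": None},
    }

    def fake_fetch(req: urllib.request.Request) -> dict:
        query = urllib.parse.urlparse(req.full_url).query
        return fake_pages[int(urllib.parse.parse_qs(query)["page"][0])]

    print(list(iter_items("dummy-token", fetch=fake_fetch)))  # [{'id': 1}, {'id': 2}, {'id': 3}]
```

The fetch parameter lets you swap in a stub for testing, or a different HTTP client, without touching the pagination logic.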

When it comes to tools, there is an array of scraping libraries and frameworks available to make your life easier. These tools provide pre-built functions and utilities that handle common scraping tasks, allowing you to focus on extracting and processing data. Popular libraries like Scrapy, Puppeteer, or Selenium offer a wealth of features, including handling cookies, interacting with forms, and simulating user behavior, making complex scraping tasks more manageable.

It’s worth mentioning that scraping dynamic content and JavaScript-based websites requires special attention. Many modern websites rely heavily on JavaScript to load and display data dynamically. In such cases, traditional scraping techniques may fall short. However, with the rise of headless browsers and tools like Puppeteer and Selenium, you can render JavaScript and interact with dynamic content programmatically, enabling you to scrape data from even the most interactive websites.

When selecting the right scraping technique and tools, consider the complexity of the websites you’re targeting, the scale of your scraping project, and the programming language you’re comfortable with. Assess the trade-offs between simplicity, flexibility, and performance to make an informed choice.

Keep in mind that proxies also play a vital role in enhancing your scraping capabilities and ensuring a smooth and uninterrupted scraping experience. Proxies act as intermediaries between your scraping code and the target website, masking your IP address and providing anonymity. By rotating through a pool of proxies, you can avoid IP blocking, bypass rate limits, and prevent your scraping activities from being traced back to your original IP. Proxies also allow you to scrape websites that have geo-restrictions, granting you access to region-specific data. Additionally, proxies help distribute scraping requests across multiple IP addresses, reducing the risk of being flagged as a bot and enhancing the scalability of your scraping operations. It’s important to choose reliable proxy providers that offer a diverse range of IP addresses and ensure high uptime and performance. Incorporating proxies into your web scraping toolkit empowers you with the ability to scrape more efficiently, overcome restrictions, and maintain a low profile while harvesting valuable data from the web.
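A simple form of proxy rotation is a round-robin pool, where each request goes out through the next proxy in the list. This sketch uses urllib.request.ProxyHandler from the Python standard library. The proxy URLs are placeholders. A production pool would also need health checks, credentials, and retry logic, all of which are omitted here.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; use the addresses your provider issues.
PROXIES = [
    "http://proxy1.example.net:8080",
    "http://proxy2.example.net:8080",
    "http://proxy3.example.net:8080",
]


class ProxyRotator:
    """Hand out proxies in round-robin order, one per request."""

    def __init__(self, proxies: list[str]):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self) -> tuple[str, urllib.request.OpenerDirector]:
        """Return the next proxy and a urllib opener that routes through it."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
for _ in range(4):
    proxy, opener = rotator.next_opener()
    print(proxy)  # cycles proxy1, proxy2, proxy3, then proxy1 again
    # opener.open("https://example.com/", timeout=10) would send the request via `proxy`.
```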

With a deep understanding of web scraping techniques and a toolbox filled with powerful tools, you can unleash the full potential of web data. Whether you’re scraping structured HTML, utilizing APIs, or conquering dynamic websites, these techniques and tools empower you to navigate the vast landscape of the web and extract valuable insights with finesse and efficiency.

Web Scraping Performance and Efficiency Optimization

In the world of web scraping, time is of the essence. The ability to extract data quickly and efficiently can mean the difference between staying ahead of the competition and falling behind. That’s why optimizing the performance and efficiency of your scraping operations is crucial for maximizing productivity and minimizing unnecessary delays.

One essential aspect of performance optimization is implementing rate limits and delays in your scraping code. Respectful scraping involves being mindful of the server resources of the target websites. By incorporating appropriate delays between requests and adhering to rate limits specified by the website, you can avoid overloading servers and potential IP blocks. Balancing the speed of your scraping with responsible behavior ensures a harmonious scraping experience for both you and the website owners.
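The following sketch shows one way to enforce a per-host delay in Python. It assumes a default of one request per second, which is an arbitrary choice. If the site’s robots.txt declares a longer Crawl-delay for your user agent, the sketch adopts that value instead. The PoliteThrottle class and the bot name are hypothetical. Crawl-delay is a non-standard robots.txt extension, so many sites will not declare one.

```python
import time
import urllib.robotparser
from urllib.parse import urlparse

USER_AGENT = "example-research-bot"  # hypothetical name; identify your scraper honestly


class PoliteThrottle:
    """Keep at least a fixed delay between requests to the same host."""

    def __init__(self, default_delay: float = 1.0):
        self.default_delay = default_delay
        self._delays: dict[str, float] = {}
        self._last_request: dict[str, float] = {}

    def set_delay_from_robots(self, host: str, robots_lines: list[str]) -> None:
        """Adopt the site's Crawl-delay if it is longer than our default."""
        parser = urllib.robotparser.RobotFileParser()
        parser.parse(robots_lines)
        crawl_delay = parser.crawl_delay(USER_AGENT)
        if crawl_delay is not None:
            self._delays[host] = max(float(crawl_delay), self.default_delay)

    def delay_for(self, host: str) -> float:
        return self._delays.get(host, self.default_delay)

    def wait(self, url: str) -> float:
        """Sleep until this host may be contacted again; return seconds slept."""
        host = urlparse(url).netloc
        last = self._last_request.get(host)
        sleep_for = 0.0
        if last is not None:
            sleep_for = max(0.0, last + self.delay_for(host) - time.monotonic())
        if sleep_for > 0:
            time.sleep(sleep_for)
        self._last_request[host] = time.monotonic()
        return sleep_for


throttle = PoliteThrottle(default_delay=1.0)
throttle.set_delay_from_robots("example.com", ["User-agent: *", "Crawl-delay: 5"])
print(throttle.delay_for("example.com"))  # 5.0
```

In a live scraper, you would load the file with RobotFileParser.set_url() and read(). You would then call can_fetch(USER_AGENT, url) before each request, so that Disallow rules are honored as well as the delay.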

Caching mechanisms are also powerful tools for improving efficiency. When scraping websites, it’s common to encounter redundant data or data that doesn’t change frequently. By implementing caching, you can store previously scraped data and retrieve it when needed, reducing redundant requests and saving precious time and resources. This not only speeds up your scraping process but also minimizes the burden on the target website’s servers.
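A minimal in-memory cache with a time-to-live (TTL) captures the idea: repeat requests for the same URL within the TTL are served locally instead of hitting the site again. The TTLCache class and its one-hour default are illustrative assumptions. A real project might persist the cache to disk instead. Another option is HTTP conditional requests (ETag with If-None-Match), which let the server answer 304 Not Modified instead of resending the page.

```python
import time
from typing import Callable


class TTLCache:
    """Serve repeat requests for the same URL from memory until `ttl` seconds pass."""

    def __init__(
        self,
        fetch: Callable[[str], str],
        ttl: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ):
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._store: dict[str, tuple[float, str]] = {}
        self.misses = 0  # number of times we actually went to the network

    def get(self, url: str) -> str:
        now = self._clock()
        cached = self._store.get(url)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]
        self.misses += 1
        body = self._fetch(url)
        self._store[url] = (now, body)
        return body
```

The clock parameter exists so that tests can control time. In normal use, pass only the fetch function, and let the misses counter show how many requests the cache actually saved.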

Optimizing your code and algorithms is another key aspect of performance improvement. Well-structured and efficient code can significantly enhance the speed of your scraping operations. Techniques such as utilizing asynchronous programming or multiprocessing can help you scrape multiple websites concurrently, leveraging the power of parallelism. Analyze your code for bottlenecks, eliminate unnecessary operations, and seek ways to optimize resource usage to achieve faster and more efficient scraping.
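Here is a sketch of asynchronous scraping. The code runs many fetches concurrently with asyncio, while an asyncio.Semaphore caps how many are in flight at once, so the speed-up does not turn into a flood of requests. The fetch coroutine is a stand-in that only simulates network latency. In practice, you would replace it with a call to an async HTTP client such as aiohttp or httpx. The concurrency limit of five is arbitrary.

```python
import asyncio
import random


async def fetch(url: str) -> str:
    """Stand-in for a real async HTTP call; it only simulates network latency."""
    await asyncio.sleep(random.uniform(0.01, 0.05))
    return f"<html>{url}</html>"


async def scrape_all(urls: list[str], max_concurrency: int = 5) -> dict[str, str]:
    """Fetch every URL concurrently, never running more than max_concurrency at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> tuple[str, str]:
        async with semaphore:
            return url, await fetch(url)

    results = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return dict(results)


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(20)]
    pages = asyncio.run(scrape_all(urls))
    print(len(pages))  # 20
```

Bounding concurrency per run keeps parallelism compatible with the rate limits discussed above. When scraping a single site, you would typically keep the limit low.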

Furthermore, HTTP compression can greatly reduce the amount of data transferred during scraping. When your scraper sends an Accept-Encoding header listing the formats it supports, servers that offer compression reply with a compressed body, and your client decompresses it. Popular compression algorithms like Gzip or Deflate can shrink HTTP responses considerably. Smaller responses use less bandwidth on both ends and usually arrive faster. The server does spend some CPU time on compression, so the main saving is bandwidth rather than server load.
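The sketch below shows the client side of HTTP compression using Python’s standard library. It advertises gzip and deflate support through the Accept-Encoding header, then decompresses according to the Content-Encoding the server actually returns. Python’s urllib does not decompress response bodies automatically, so the decode step is explicit here. Higher-level clients such as requests do this for you. The function names are illustrative.

```python
import gzip
import urllib.request
import zlib
from typing import Optional


def build_compressed_request(url: str) -> urllib.request.Request:
    """Advertise gzip/deflate support; the server may still answer uncompressed."""
    return urllib.request.Request(url, headers={"Accept-Encoding": "gzip, deflate"})


def decode_body(raw: bytes, content_encoding: Optional[str]) -> bytes:
    """Undo the Content-Encoding the server applied (urllib leaves this to you)."""
    encoding = (content_encoding or "identity").strip().lower()
    if encoding == "gzip":
        return gzip.decompress(raw)
    if encoding == "deflate":
        # Servers disagree on "deflate": try zlib-wrapped data first, then raw DEFLATE.
        try:
            return zlib.decompress(raw)
        except zlib.error:
            return zlib.decompress(raw, -zlib.MAX_WBITS)
    if encoding == "identity":
        return raw
    raise ValueError(f"unsupported Content-Encoding: {encoding}")


def fetch(url: str) -> bytes:
    with urllib.request.urlopen(build_compressed_request(url), timeout=10) as resp:
        return decode_body(resp.read(), resp.headers.get("Content-Encoding"))
```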

When optimizing performance, don’t overlook the importance of monitoring and logging. Implementing a robust logging system allows you to track the progress of your scraping operations, identify errors or issues, and make informed adjustments. Monitoring tools can help you keep an eye on response times, server status, and other relevant metrics, ensuring that your scraping efforts remain on track and efficient.
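A small wrapper around the fetch step is often enough to start with. It records the status code, response size, and latency of every request, and it logs failures with a traceback. This sketch uses Python’s logging module. The timed_fetch name, and the convention that fetch returns a (status, body) pair, are hypothetical.

```python
import logging
import time
from typing import Callable

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
log = logging.getLogger("scraper")


def timed_fetch(url: str, fetch: Callable[[str], tuple[int, bytes]]) -> bytes:
    """Call `fetch`, then log status, size and latency; log and re-raise failures."""
    start = time.perf_counter()
    try:
        status, body = fetch(url)
    except Exception:
        log.exception("fetch failed url=%s", url)
        raise
    elapsed_ms = (time.perf_counter() - start) * 1000
    # HTTP errors are logged as warnings so they stand out when scanning the log.
    level = logging.WARNING if status >= 400 else logging.INFO
    log.log(level, "url=%s status=%d bytes=%d elapsed_ms=%.1f",
            url, status, len(body), elapsed_ms)
    return body
```

Key=value messages like these are easy to grep, or to feed into a monitoring tool that tracks response times and error rates.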

By prioritizing performance and efficiency optimization in your web scraping endeavors, you can harness the full potential of your scraping code and unlock data at an unprecedented pace. Whether it’s implementing rate limits, utilizing caching mechanisms, optimizing code and algorithms, or employing data compression techniques, every optimization step you take brings you closer to a seamless and efficient web scraping experience.

Handling Anti-Scraping Measures

In the cat-and-mouse game of web scraping, websites often employ various anti-scraping measures to protect their data and prevent automated bots from accessing their content. Understanding and effectively handling these measures is crucial to ensure a seamless scraping experience while maintaining a respectful and ethical approach.

One common anti-scraping technique is the implementation of CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) challenges. CAPTCHAs are designed to differentiate between humans and bots by presenting tests that are easy for humans to solve but difficult for automated scripts. When faced with CAPTCHAs, options include CAPTCHA-solving services or headless browsers like Puppeteer or Selenium. Keep in mind that a headless browser only renders the page and lets your code interact with the CAPTCHA prompt. It does not solve the challenge by itself, so automated solving still relies on a separate solving service.

Another challenge you may encounter is IP blocking, where websites detect and block IP addresses associated with excessive scraping activities. To bypass IP blocks, rotating your IP addresses is an effective approach. This can be achieved through the use of proxy servers, which act as intermediaries between your scraping code and the target website, allowing you to scrape with different IP addresses and avoid detection.

Websites may also employ various bot detection mechanisms to identify scraping activities. To reduce the chance of being flagged, make your traffic resemble ordinary browsing. Options include simulating mouse movements, randomizing the intervals between requests, or even training machine learning models that mimic user behavior. By emulating human browsing patterns, you can lower the chances of being detected as a scraping bot.

It’s important to stay updated on evolving anti-scraping techniques and adapt your scraping strategies accordingly. Websites may implement sophisticated measures beyond CAPTCHAs and IP blocking, such as behavior analysis, cookie tracking, or JavaScript challenges. By keeping an eye on industry developments, actively engaging with scraping communities, and participating in forums, you can stay ahead of the curve and discover innovative solutions to handle emerging anti-scraping measures.

Remember, while it’s essential to overcome anti-scraping measures, it’s equally important to respect the website’s policies and terms of service. Implementing techniques to bypass anti-scraping measures should be done responsibly and in a manner that does not disrupt or harm the website’s functionality. Strive to strike a balance between gathering valuable data and respecting the website owner’s rights and interests.
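One concrete way to avoid disrupting a site is to back off when it signals overload. The sketch below retries only on HTTP 429 (Too Many Requests) and 503 (Service Unavailable) responses. When the server sends a Retry-After value, the sketch waits that long. Otherwise, it uses capped exponential backoff with random jitter. The retry limit, base delay, and cap are assumptions that you would tune per site.

```python
import random
import time
import urllib.error
import urllib.request
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional

RETRYABLE_STATUSES = {429, 503}  # Too Many Requests, Service Unavailable


def retry_after_seconds(header: Optional[str]) -> Optional[float]:
    """Parse Retry-After, which may be a number of seconds or an HTTP date."""
    if not header:
        return None
    header = header.strip()
    if header.isdigit():
        return float(header)
    try:
        when = parsedate_to_datetime(header)
    except (TypeError, ValueError):
        return None
    if when.tzinfo is None:  # treat dates without a zone as UTC
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - datetime.now(timezone.utc)).total_seconds())


def backoff_delay(attempt: int, retry_after: Optional[float],
                  base: float = 1.0, cap: float = 60.0) -> float:
    """Prefer the server's own hint; otherwise capped exponential backoff with jitter."""
    if retry_after is not None:
        return retry_after
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(url: str, max_attempts: int = 5) -> bytes:
    for attempt in range(max_attempts):
        try:
            with urllib.request.urlopen(url, timeout=10) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            # Give up on non-retryable errors, or once attempts are exhausted.
            if err.code not in RETRYABLE_STATUSES or attempt == max_attempts - 1:
                raise
            hint = retry_after_seconds(err.headers.get("Retry-After"))
            time.sleep(backoff_delay(attempt, hint))
    raise ValueError("max_attempts must be at least 1")
```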

By understanding common anti-scraping techniques, implementing anti-bot detection bypass methods, and employing strategies like rotating IP addresses, you can navigate the anti-scraping landscape with finesse and ensure the continuity of your scraping operations.

Web Scraping Documentation and Maintenance

Documentation is the foundation on which a successful scraping project stands. Create comprehensive documentation that outlines the purpose, scope, and methodologies of your scraping project. Include information on the websites being scraped, the data being extracted, and any specific requirements or limitations. Clear and concise documentation not only aids in understanding your scraping code but also serves as a reference for future enhancements or collaborations.

Equally important is creating clear and concise code documentation. Commenting your code and providing explanations for complex algorithms or functions not only helps you understand your own code but also assists others who may need to maintain or modify it. Well-documented code is a gift to your future self and fellow developers, ensuring that the scraping project remains understandable and maintainable over time.

Regularly updating and maintaining your scraping codebase is vital to keeping it running smoothly and adapting to changes in the target websites. Websites often undergo modifications, which may impact the structure of the data or the scraping techniques employed. By staying vigilant and regularly reviewing and updating your scraping code, you can promptly address any issues arising from website changes, ensuring uninterrupted data extraction.
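A lightweight safeguard against silent breakage is to validate each batch of extracted records, and to fail loudly when required fields start coming back empty. Empty fields are a common symptom of a changed page layout. The field names and the 20% threshold below are hypothetical values for a product scraper.

```python
from dataclasses import dataclass, field

# Hypothetical fields for a product scraper; list whatever your records must contain.
REQUIRED_FIELDS = ("name", "price", "url")


@dataclass
class ExtractionReport:
    total: int
    missing: dict[str, int] = field(default_factory=dict)

    def failure_rate(self, name: str) -> float:
        return self.missing.get(name, 0) / self.total if self.total else 0.0


def check_records(records: list[dict], threshold: float = 0.2) -> ExtractionReport:
    """Raise if a batch looks like the page layout changed under the scraper."""
    if not records:
        raise RuntimeError("no records extracted; the page structure may have changed")
    report = ExtractionReport(total=len(records))
    for record in records:
        for name in REQUIRED_FIELDS:
            if not record.get(name):  # absent, None or empty string
                report.missing[name] = report.missing.get(name, 0) + 1
    broken = [n for n in REQUIRED_FIELDS if report.failure_rate(n) > threshold]
    if broken:
        raise RuntimeError(f"possible layout change; fields failing: {broken}")
    return report
```

Running this check after every scrape turns a quiet drop in data quality into an explicit error, so selectors get updated promptly rather than weeks later.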

In addition to code maintenance, it’s essential to review and update your scraping infrastructure. Keep an eye on changes in technologies, libraries, and frameworks that you’re using. Regularly update them to newer versions, testing each upgrade so you gain security fixes and performance improvements without breaking compatibility. Staying up-to-date with the latest developments in the web scraping landscape helps you stay ahead of potential challenges and leverage new features or optimizations.

Implementing a version control system, such as Git, is another valuable practice for managing your scraping codebase. Version control enables you to track changes, revert to previous versions, collaborate with others, and maintain a well-documented history of your scraping project. It provides a safety net and promotes collaborative development, allowing you to experiment and make changes confidently while having the ability to roll back if needed.

Remember, documentation and maintenance are not one-time tasks but ongoing commitments. Treat your scraping project as a living entity that requires care and attention. Regularly review and update your documentation, perform maintenance checks, and embrace a mindset of continuous improvement. By cultivating a culture of documentation and maintenance, you ensure that your scraping endeavors remain agile, adaptable, and poised for success in the ever-evolving landscape of the web.

Conclusion

Mastering web scraping best practices and incorporating the invaluable tips we’ve explored throughout this article is the key to unlocking the vast potential of web data while maintaining ethical and responsible scraping practices. By understanding the legal and ethical considerations, planning and preparing meticulously, utilizing effective scraping techniques and tools, optimizing performance and efficiency, prioritizing documentation and maintenance, and embracing the principles of data privacy and security, you can embark on a successful and rewarding web scraping journey. Remember, continuous learning, adaptation, and a commitment to responsible scraping practices are the cornerstones of harnessing the power of web scraping effectively. So, armed with these best practices and tips, venture forth confidently into the digital realm, knowing that you possess the knowledge and tools to navigate the complexities of the web and extract valuable insights with integrity and excellence.

FAQs

Is web scraping legal?

Web scraping is not illegal in itself. Whether a particular project is lawful depends on what you collect, how you collect it, and the jurisdiction you operate in. Respect website terms of service, adhere to copyright laws, and obtain proper consent for scraping to ensure compliance.

How do I handle anti-scraping measures?

To handle anti-scraping measures, familiarize yourself with common techniques like CAPTCHA or IP blocking, implement anti-bot detection bypass methods, and employ strategies such as rotating user agents and IP addresses.

How can I optimize the performance of my web scraping code?

Optimize performance by implementing rate limits and delays, utilizing caching mechanisms, optimizing code and algorithms, and leveraging data compression techniques to achieve faster and more efficient scraping operations.