Web scraping, also known as data scraping or web harvesting, is a technique used to extract large amounts of data from websites. It involves the automated retrieval of information from web pages and the conversion of that data into a structured format, such as a spreadsheet or a database.

Python has become one of the most popular programming languages for web scraping thanks to its ease of use, its flexibility, and the wide range of libraries and frameworks available for the task. It is the language of choice for many hobbyists and professionals alike.

In this article, we will explore the importance of web scraping and the advantages of using Python for web scraping. We will also provide an overview of the different tools and techniques available for web scraping with Python, and provide some examples of how Python can be used for web scraping in various contexts. Whether you are a beginner or an experienced developer, this guide will provide you with valuable insights into the world of web scraping with Python.

Getting Started with Web Scraping in Python

Web scraping has become increasingly popular over the years because it lets you collect data from websites automatically. Python, one of the most popular programming languages, has a variety of libraries and tools that make this task straightforward.

In this section, we will cover the basics of getting started with web scraping in Python, including installing Python and the necessary libraries, setting up the development environment, and the basics of HTML, CSS, and XPath.

Installing Python and the necessary libraries

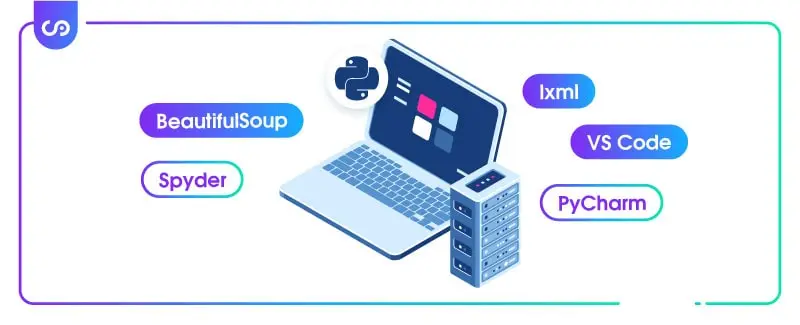

Before we start, we need to install Python and the necessary libraries. Python can be downloaded and installed from the official Python website (https://www.python.org/downloads/). Once it is installed, we need to install the libraries that we will use for web scraping. Some of the popular libraries used for web scraping in Python are BeautifulSoup, lxml, and requests. We can install them with pip, the package installer for Python, by running pip install beautifulsoup4 lxml requests. Note that BeautifulSoup is published under the package name beautifulsoup4 and is imported in code as bs4.
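Once pip has finished, a quick way to confirm the installation is to ask Python which versions it can see. The sketch below uses only the standard library's importlib.metadata module (Python 3.8 or later); the package list simply mirrors the three libraries named above, and the installed_versions function is our own illustrative helper, not part of any library.

```python
from importlib.metadata import PackageNotFoundError, version

# PyPI distribution names, not import names: BeautifulSoup is installed
# as "beautifulsoup4" but imported as "bs4".
REQUIRED = ["beautifulsoup4", "lxml", "requests"]


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any package reported as NOT INSTALLED can be installed with pip before moving on.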

Setting up the development environment

Once we have Python and the necessary libraries installed, we need to set up our development environment. There are several Integrated Development Environments (IDEs) available for Python, including PyCharm, Spyder, and VS Code. We can choose any IDE that we are comfortable with. Once we have an IDE installed, we can create a new project and start coding.

Basics of HTML, CSS, and XPath

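HTML (HyperText Markup Language) is the standard markup language used to create web pages; a page is a tree of nested elements such as div, a, and span, each of which can carry attributes like class and id. CSS (Cascading Style Sheets) is a language used to describe the presentation of a document written in HTML, and its selectors (for example, div.product span.price) are also a convenient way to pick out elements when scraping. XPath is a query language for selecting nodes in XML documents, and libraries such as lxml can apply it to HTML as well. Understanding the basics of HTML, CSS selectors, and XPath is essential for web scraping in Python, because every extraction step comes down to pointing at the right elements in the page.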
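To make these ideas concrete, the following sketch applies a CSS selector (through BeautifulSoup's select method) and an equivalent XPath expression (through lxml) to the same small HTML snippet. The markup, class names, and variable names are made up for illustration, and the sketch assumes beautifulsoup4 and lxml are installed.

```python
from bs4 import BeautifulSoup
from lxml import html as lxml_html

# A tiny, made-up page used only for illustration.
PAGE = """
<html>
  <body>
    <div class="product" id="p1">
      <h2 class="name">Desk Lamp</h2>
      <span class="price">$24.99</span>
    </div>
    <div class="product" id="p2">
      <h2 class="name">Office Chair</h2>
      <span class="price">$89.00</span>
    </div>
  </body>
</html>
"""

# CSS selector: every <span class="price"> inside a <div class="product">.
soup = BeautifulSoup(PAGE, "html.parser")
css_prices = [span.get_text(strip=True) for span in soup.select("div.product span.price")]

# XPath: the same elements, addressed by path and attribute tests.
tree = lxml_html.fromstring(PAGE)
xpath_prices = tree.xpath('//div[@class="product"]/span[@class="price"]/text()')

print(css_prices)
print(xpath_prices)
```

Both lists hold the same two prices, $24.99 and $89.00. CSS selectors tend to be shorter, while XPath can also express things CSS cannot, such as matching on an element's text or stepping up to a parent element.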

Web Scraping with Python

Python provides several libraries for web scraping, but one of the most popular and user-friendly is BeautifulSoup. Here, we’ll go over the basics of using BeautifulSoup to extract data from web pages.

Using BeautifulSoup for Web Scraping

The first step in web scraping with BeautifulSoup is to install the library with pip, the package installer for Python (pip install beautifulsoup4). Once the library is installed, you can import it in your code as bs4 and start using it to extract data from web pages.

Extracting Data from HTML Pages

To extract data from an HTML page, you first need to download the page’s source code. This can be done using the requests library, which allows you to send HTTP requests using Python. Once you have the source code, you can pass it to BeautifulSoup, which will parse the HTML and allow you to extract the data you need.
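A minimal sketch of this download-then-parse flow, assuming the requests and beautifulsoup4 packages are installed. The function names are our own, and the timeout value is an arbitrary choice that keeps a stalled server from hanging the script.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a page and return its HTML as text."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return response.text


def extract_title_and_links(html):
    """Parse HTML and return the page title and the href of every link."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.title.get_text(strip=True) if soup.title else None
    # href=True skips <a> tags that have no href attribute.
    links = [a["href"] for a in soup.find_all("a", href=True)]
    return title, links
```

Keeping the download and the parsing in separate functions makes the parser easy to test against saved HTML. A typical call would be title, links = extract_title_and_links(fetch_html("https://example.com/")), where the URL is a placeholder for a page you are permitted to scrape.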

Parsing XML Documents

In addition to HTML, BeautifulSoup can also parse XML documents. XML is a markup language used for structuring and storing data. The process is similar to that for HTML: you download the XML source and pass it to BeautifulSoup, this time selecting its "xml" parser, which requires lxml to be installed. From there, you can extract the data you need.
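The sketch below parses a small, made-up RSS-style document with BeautifulSoup's "xml" parser (which needs lxml). The feed contents and the parse_feed_items name are illustrative assumptions.

```python
from bs4 import BeautifulSoup

# A small, made-up RSS-style feed used for illustration.
FEED = """<rss version="2.0">
  <channel>
    <title>Example Feed</title>
    <item><title>First post</title><link>https://example.com/1</link></item>
    <item><title>Second post</title><link>https://example.com/2</link></item>
  </channel>
</rss>"""


def parse_feed_items(xml_text):
    """Return (title, link) pairs for every <item> in an RSS-like document."""
    # "xml" selects lxml's XML parser. An HTML parser would treat <link>
    # as an empty element and lose the URL text inside it.
    soup = BeautifulSoup(xml_text, "xml")
    return [
        (item.find("title").get_text(strip=True), item.find("link").get_text(strip=True))
        for item in soup.find_all("item")
    ]
```

Calling parse_feed_items(FEED) returns one (title, link) tuple per item.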

Scraping Data from JSON Files

JSON is a lightweight data interchange format used for storing and transmitting data between servers and web applications. Many web APIs return data in JSON format, making it a popular data source for web scraping. To extract data from a JSON file, you first need to download the file using the requests library. Once you have the file, you can use the json library in Python to parse the JSON data and extract the information you need.
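A minimal sketch, assuming a hypothetical API endpoint that returns a JSON array of objects. fetch_json downloads the document and decodes it with the json module; extract_fields is a hypothetical helper that keeps only the keys you care about and fills missing keys with None.

```python
import json

import requests


def fetch_json(url, timeout=10):
    """Download a JSON document and parse it with the json module."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return json.loads(response.text)  # response.json() is an equivalent shortcut


def extract_fields(records, *fields):
    """Keep only the named fields from a list of JSON objects (dicts)."""
    return [{field: record.get(field) for field in fields} for record in records]
```

For example, extract_fields(fetch_json("https://api.example.com/users"), "id", "name") would return a list of small dicts; the URL is a placeholder.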

In this section, we have covered the basics of web scraping with Python using the requests and BeautifulSoup libraries together with Python's built-in json module. By following the steps outlined above, you can extract data from HTML pages, parse XML documents, and extract data from JSON files.

Advanced Web Scraping Techniques with Python

Web scraping with Python can be taken to the next level with advanced techniques that allow for more complex scraping tasks. In this section, we will discuss some advanced web scraping techniques with Python.

- Scraping Dynamic Websites with Selenium: Many modern websites load their content with JavaScript after the initial page arrives, so the HTML that the requests library downloads may not contain the data you see in the browser. To scrape these websites, we can use Selenium, a browser automation tool that drives a real browser through WebDriver, so we can interact with the website as a human user would. Selenium can automate tasks like clicking buttons, scrolling, and filling out forms, and the rendered HTML can then be parsed as usual.

- Using Regular Expressions to Extract Data: Regular expressions are a powerful tool for searching and manipulating text. In web scraping, they are best suited to pulling patterns such as prices, dates, or IDs out of text that has no further structure, for example the text content of an element; for navigating the HTML itself, a parser such as BeautifulSoup is more reliable. Python’s built-in re module makes it easy to use regular expressions for data extraction.

- Handling Cookies and Sessions: Many websites use cookies and sessions to track user activity and preferences, such as whether you are logged in. When scraping these websites, the cookies the server sets must be sent back on later requests, otherwise the site treats every request as a brand-new visitor. The third-party Requests library handles this with a Session object, which stores cookies and reuses them automatically.

- Scraping Websites with Login Pages: Some websites require users to log in before they can access certain content. To scrape these websites, we need to authenticate ourselves using our login credentials. The Requests library can send a POST request with our credentials to the website’s login form and then reuse the resulting session cookie to scrape the protected pages. Many login forms also include a hidden CSRF token that must be read from the form and submitted along with the credentials.
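As an example of the regular-expression approach, the sketch below pulls dollar amounts out of a text string, such as the text of a price element already extracted with BeautifulSoup. The pattern and the extract_prices name are illustrative assumptions; real price formats vary by site and locale.

```python
import re

# A "$" followed by digits, optional ",ddd" thousands groups, and optional cents.
PRICE_RE = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text):
    """Return every dollar amount in text as a float."""
    return [float(match.replace(",", "")) for match in PRICE_RE.findall(text)]


print(extract_prices("Was $1,299.00, now $999.99 (save $300)"))
# [1299.0, 999.99, 300.0]
```

Because the pattern has one capturing group, findall returns just the numeric part, which is why the "$" never reaches float().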

By mastering these advanced techniques, you will be able to scrape many websites that a plain requests and BeautifulSoup script cannot handle, and extract the data you need for your projects.
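The cookie and login techniques from the list above can be sketched with a requests.Session, which stores the cookies a server sets and sends them back on later requests. Everything site-specific here is hypothetical: the URLs, the username and password form field names, and the helper names must be replaced with values taken from the target site's actual login form.

```python
import requests

# Hypothetical URLs: take the real ones from the site you are scraping.
LOGIN_URL = "https://example.com/login"
PROTECTED_URL = "https://example.com/account/orders"


def create_session(user_agent="my-scraper/0.1"):
    """Create a Session that keeps cookies and headers between requests."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


def log_in(session, username, password, login_url=LOGIN_URL):
    """POST the login form; cookies set by the server are stored on the session."""
    response = session.post(
        login_url,
        data={"username": username, "password": password},  # hypothetical field names
        timeout=10,
    )
    response.raise_for_status()
    return response


def fetch_protected(session, url=PROTECTED_URL):
    """Fetch a page that needs the login cookie; the session sends it automatically."""
    response = session.get(url, timeout=10)
    response.raise_for_status()
    return response.text
```

Many sites answer a failed login with an ordinary 200 page, so raise_for_status alone does not prove the login worked; checking the returned page for something that only appears when logged in is a more reliable test.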

Best Practices for Web Scraping with Python

Web scraping is a powerful technique that can extract a vast amount of data from websites. However, it is important to follow some best practices to ensure that you are scraping websites in a responsible and ethical manner, while avoiding potential legal issues and website blocks. In this section, we will discuss some best practices for web scraping with Python.

- Understanding web scraping ethics and legality: Before you start scraping a website, it is essential to understand the ethical and legal implications of web scraping. Some websites have specific policies and guidelines for web scraping, while others prohibit it altogether. Check the website’s terms of service and its robots.txt file, which lists the paths automated clients are asked not to visit, to make sure you are not violating any rules.

- Avoiding getting blocked by websites: Websites use various techniques to detect and block web scrapers, such as rate limiting and filtering by IP address or User-Agent. Common practices for avoiding blocks include sending a descriptive User-Agent header, rotating IP addresses, and keeping the request rate low by pausing between requests.

- Handling errors and exceptions: Web scraping can be unpredictable: requests time out, servers return error codes, and page layouts change. Use try-except blocks to handle these exceptions, retry failures that are likely to be temporary, and log the errors so you can diagnose issues quickly.

- Tips for writing efficient and maintainable code: Writing efficient and maintainable code is critical for web scraping. Some tips include writing reusable functions (for example, keeping fetching and parsing separate), reusing a single HTTP session for many requests, optimizing your code for speed and memory usage, and following general coding best practices.
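For the robots.txt check, Python's standard library includes urllib.robotparser. The sketch below feeds it made-up robots.txt lines so it runs offline; against a real site you would instead call set_url with the site's robots.txt address and then read(). The user-agent string my-scraper is a placeholder.

```python
from urllib.robotparser import RobotFileParser


def build_robot_parser(robots_txt_lines):
    """Create a parser from robots.txt content that was already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt_lines)
    return parser


# Made-up robots.txt content for illustration.
EXAMPLE_ROBOTS = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 5",
]

rp = build_robot_parser(EXAMPLE_ROBOTS)
print(rp.can_fetch("my-scraper", "https://example.com/products"))   # True
print(rp.can_fetch("my-scraper", "https://example.com/private/x"))  # False
print(rp.crawl_delay("my-scraper"))                                 # 5
```

robots.txt expresses the site owner's wishes rather than granting legal permission, so it complements reading the terms of service instead of replacing it.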

By following these best practices, you can ensure that you are scraping websites in a responsible and ethical manner while avoiding potential legal issues and website blocks.
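Several of these practices fit in one small helper: an identifying User-Agent header, a request timeout, retries with exponential backoff for temporary failures, and logging of each failed attempt. This is a sketch under stated assumptions, not a standard recipe: the header value, the set of status codes treated as retryable, and the polite_get name are all our own choices.

```python
import logging
import time

import requests

logger = logging.getLogger(__name__)

# Hypothetical identifying header: replace with your project name and contact.
DEFAULT_HEADERS = {"User-Agent": "my-scraper/0.1 (contact: you@example.com)"}

# Our assumption about which HTTP statuses are worth retrying.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def polite_get(session, url, retries=3, backoff=1.0, timeout=10):
    """GET url through session, retrying temporary failures.

    Waits backoff * 2**(attempt - 1) seconds between attempts and re-raises
    the last error once all retries are used up.
    """
    for attempt in range(1, retries + 1):
        try:
            response = session.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
            response.raise_for_status()
            return response
        except requests.HTTPError as exc:
            # Errors such as 404 will not go away on retry, so fail immediately.
            if exc.response is None or exc.response.status_code not in RETRYABLE_STATUS:
                raise
            error = exc
        except (requests.ConnectionError, requests.Timeout) as exc:
            error = exc
        logger.warning("Attempt %d/%d for %s failed: %s", attempt, retries, url, error)
        if attempt == retries:
            raise error
        time.sleep(backoff * 2 ** (attempt - 1))
```

Usage would look like polite_get(requests.Session(), "https://example.com/page"). Between successful requests to the same site it is still worth adding a fixed pause, for example with time.sleep, to keep the overall request rate low.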

Examples of Web Scraping with Python

In this section, we will look at some practical examples of web scraping with Python using different libraries and techniques.

- Scraping product information from an e-commerce website

  - We will use the BeautifulSoup library to scrape product information from an e-commerce website, extracting the product name, description, price, and image URL.

- Extracting data from social media platforms

  - We will use the Twitter API with the Tweepy library to extract tweets from a specific user or hashtag, and the Reddit API with the PRAW library to extract posts from a subreddit. Both APIs require registering a developer application and obtaining credentials, and their access terms and pricing have changed over time, so check the current terms before building on them.

- Scraping weather data from a website

  - We will use the Requests library to download weather data from a website, then extract the temperature, humidity, wind speed, and other relevant information with BeautifulSoup (or with the json module, if the site offers a JSON API).
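For the product example, a minimal sketch might look like the following. The HTML, its class names (product-card, title, description, price), and the base URL are made up for illustration; on a real site you would find the right selectors with your browser's developer tools.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Made-up markup standing in for a product listing page.
LISTING = """
<div class="product-card">
  <h2 class="title">Desk Lamp</h2>
  <p class="description">Adjustable LED lamp.</p>
  <span class="price">$24.99</span>
  <img src="/img/lamp.jpg" alt="Desk Lamp">
</div>
<div class="product-card">
  <h2 class="title">Office Chair</h2>
  <p class="description">Ergonomic mesh chair.</p>
  <span class="price">$89.00</span>
  <img src="/img/chair.jpg" alt="Office Chair">
</div>
"""


def text_or_none(tag):
    """Return a tag's stripped text, or None when the element is missing."""
    return tag.get_text(strip=True) if tag else None


def parse_products(html, base_url="https://shop.example.com"):
    """Return one dict per product card with name, description, price and image URL."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.product-card"):
        img = card.select_one("img")
        src = img.get("src") if img else None
        products.append({
            "name": text_or_none(card.select_one(".title")),
            "description": text_or_none(card.select_one(".description")),
            "price": text_or_none(card.select_one(".price")),
            # urljoin turns a relative src into an absolute URL.
            "image_url": urljoin(base_url, src) if src else None,
        })
    return products
```

The price is kept as text here; converting it to a number (for instance with a regular expression, as described in the advanced techniques section) is a separate, site-specific step.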

These examples are just a few of the many ways you can use Python for web scraping. By using the libraries and techniques discussed in the previous sections, you can create your own web scraping projects to extract data from websites and automate your data collection process. However, it is important to keep in mind the ethical and legal implications of web scraping and to use these tools responsibly.
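Whichever project you start with, the final step is usually the one mentioned at the start of this article: storing the scraped records in a structured format such as a spreadsheet or a database. A minimal sketch using only the standard library's csv and sqlite3 modules, assuming each record is a dict with name and price keys (a hypothetical schema):

```python
import csv
import sqlite3


def write_csv(records, fileobj):
    """Write a list of dicts as CSV, using the first record's keys as the header."""
    if not records:
        return
    writer = csv.DictWriter(fileobj, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)


def save_to_sqlite(records, conn):
    """Insert records into a products table (created if it does not exist)."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price TEXT)")
    # Named placeholders (:name, :price) are filled from each dict's keys.
    conn.executemany("INSERT INTO products (name, price) VALUES (:name, :price)", records)
    conn.commit()
```

When writing to a real file, open it with newline="" as the csv documentation recommends, for example with open("products.csv", "w", newline="", encoding="utf-8") as f: write_csv(records, f). A database is opened with sqlite3.connect("products.db").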

Conclusion

In this article, we have discussed the basics of web scraping with Python and explored some of the most powerful and effective techniques for scraping data from websites. As we have seen, Python is an excellent language for web scraping, thanks to its rich set of libraries, ease of use, and flexibility.

Web scraping with Python can be an incredibly valuable tool for businesses and individuals alike. Whether you need to gather data for market research, stay on top of industry trends, or simply automate repetitive tasks, web scraping can help you achieve your goals more quickly and effectively.

It is important to note that web scraping must be done ethically and legally, respecting the terms of service of the websites you are scraping. In addition, it is crucial to handle errors and exceptions carefully and to write efficient and maintainable code.

In conclusion, if you are looking to get started with web scraping or are interested in taking your skills to the next level, Python is an excellent choice. With its powerful tools and growing community, Python offers endless possibilities for web scraping and data extraction. So why not give it a try and see how it can benefit you and your business?

FAQs

How to scrape data with Python?

Python provides several libraries and tools for web scraping. One of the most popular libraries for web scraping in Python is BeautifulSoup. It is a library that helps to parse and navigate HTML and XML documents. Other popular libraries for web scraping in Python include Scrapy, Requests, and Selenium. The process of web scraping involves sending an HTTP request to the website and parsing the HTML response to extract relevant data using these libraries.

What are the best Python libraries for web scraping?

There are several Python libraries available for web scraping, and the best library to use depends on the specific use case. Some of the most popular libraries for web scraping in Python are BeautifulSoup, Scrapy, Requests, Selenium, and PyQuery. BeautifulSoup is great for parsing HTML and XML, Scrapy is a powerful web crawling framework, Requests is a library for sending HTTP requests, Selenium is useful for automating web browser interactions, and PyQuery offers a jQuery-like API for querying HTML.

Can Python be used for web scraping?

Yes, Python is a popular language for web scraping due to its ease of use, flexibility, and the availability of powerful libraries and tools. Python provides several libraries that make web scraping easy, such as BeautifulSoup, Scrapy, Requests, and Selenium. With Python, you can extract data from websites, parse HTML and XML documents, and even automate web browser interactions.

How does Python compare to other languages for web scraping?

Python is a popular language for web scraping due to its ease of use and the availability of powerful libraries and tools. Compared to languages like Java and C#, Python generally has a lower learning curve and requires less code to perform web scraping tasks. Python also has a large community of developers creating libraries and tools specifically for web scraping. However, languages like Java and C# can be faster and more performant for some web scraping tasks, particularly CPU-heavy parsing at large scale; for many scrapers, though, most of the time is spent waiting on network responses, so raw language speed matters less.