Web scraping with C# is the automated extraction of data from websites. You write code that downloads web pages and collects specific data points from them, which can then be used for a variety of purposes, such as market research, lead generation, and price monitoring.

In this article, we’ll assume you already know what web scraping is and focus on doing it in C#. We’ll cover how to set up your environment, the core concepts and tools you’ll need, more advanced techniques and best practices, and the common pitfalls to avoid.

C# is well suited to web scraping. .NET includes HttpClient for making HTTP requests and async/await for running many requests concurrently, and the ecosystem offers mature libraries for parsing HTML and automating browsers. Whether you’re a beginner or an experienced developer, this article will give you the knowledge and tools you need to start web scraping with C#. So let’s dive in.

Setting Up a C# Environment for Web Scraping

Before diving into web scraping with C#, you need to set up your development environment. This involves installing Visual Studio (which can install the .NET SDK for you) and adding a C# web scraping library to your project.

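Visual Studio is an Integrated Development Environment (IDE) for creating, debugging, and deploying applications. Its Community edition is free to download from the Microsoft website. Visual Studio runs on Windows; Visual Studio for Mac has been retired, so on macOS or Linux you can use Visual Studio Code with the C# Dev Kit extension, or JetBrains Rider, together with the .NET SDK.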

Once Visual Studio is installed, the next step is to add a web scraping library to your project, usually as a NuGet package installed through Visual Studio’s NuGet Package Manager or with the dotnet add package command. .NET has several popular libraries for this, such as HtmlAgilityPack, ScrapySharp, and AngleSharp, which provide built-in functionality for parsing HTML and extracting data from web pages.

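HtmlAgilityPack is one of the most popular libraries for web scraping with C#. It provides a simple API for navigating HTML documents with XPath or LINQ and extracting data from them. ScrapySharp builds on HtmlAgilityPack and adds CSS selector support, while AngleSharp is a standards-compliant HTML parser with CSS selector queries built in. None of these libraries runs a page’s JavaScript by default, so for script-heavy pages you will usually need a browser automation tool such as Selenium or PuppeteerSharp.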
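
To give a feel for what these parsers look like in practice, here is a minimal sketch that parses an HTML string with AngleSharp and queries it with a CSS selector. It assumes the AngleSharp NuGet package is installed; the AngleSharpDemo class, the SelectText method, and the sample markup are hypothetical names chosen for illustration.

```csharp
// Assumes the AngleSharp NuGet package (dotnet add package AngleSharp).
using System;
using System.Collections.Generic;
using System.Linq;
using AngleSharp.Html.Parser;

public static class AngleSharpDemo
{
    // Parses an HTML string and returns the trimmed text of every element
    // that matches the given CSS selector.
    public static List<string> SelectText(string html, string cssSelector)
    {
        var parser = new HtmlParser();
        var document = parser.ParseDocument(html);
        return document.QuerySelectorAll(cssSelector)
                       .Select(element => element.TextContent.Trim())
                       .ToList();
    }

    public static void Main()
    {
        // Hypothetical sample markup.
        const string html = @"<ul id=""books"">
  <li class=""title"">Dune</li>
  <li class=""title"">Neuromancer</li>
</ul>";

        foreach (string title in SelectText(html, "#books li.title"))
        {
            Console.WriteLine(title);
        }
    }
}
```

Because the HTML is passed in as a string, you can test the parsing logic without touching the network; downloading the page is a separate step.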

After installing Visual Studio and integrating a web scraping library, you are now ready to start web scraping with C#.

Basic Concepts of Web Scraping With C#

Web scraping is the process of extracting data from websites automatically using software. In C#, you write programs that download pages and collect the data you need, which can then be analyzed and used for various purposes.

To get started with web scraping using C#, it is important to understand the basic concepts of web scraping. This includes understanding the structure of web pages, the types of data that can be extracted, and the tools and techniques that can be used for web scraping.

C# web scraping tools and techniques include HtmlAgilityPack, a popular open-source library for HTML parsing and manipulation that lets you extract data from HTML pages easily and efficiently. Regular expressions are another option: they work well for pulling specific text patterns, such as prices, dates, or email addresses, out of a page, but they are brittle for navigating HTML structure, which is better left to a parser.
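
For instance, a regular expression can pull prices out of raw text. The sketch below uses only the .NET base class library; the pattern assumes US-style prices such as $1,299.99 and is a hypothetical example, not a general-purpose price parser.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.RegularExpressions;

public static class RegexDemo
{
    // Matches prices like "$1,299.99" or "$15.00" and captures the numeric part.
    // Assumption: comma thousands separators and an optional two-digit decimal part.
    private static readonly Regex PricePattern =
        new Regex(@"\$(?<amount>\d{1,3}(?:,\d{3})*(?:\.\d{2})?)", RegexOptions.Compiled);

    public static List<decimal> ExtractPrices(string text)
    {
        var prices = new List<decimal>();
        foreach (Match match in PricePattern.Matches(text))
        {
            // Drop thousands separators, then parse with the invariant culture
            // so the result doesn't depend on the machine's locale.
            string amount = match.Groups["amount"].Value.Replace(",", "");
            prices.Add(decimal.Parse(amount, CultureInfo.InvariantCulture));
        }
        return prices;
    }

    public static void Main()
    {
        string snippet = "<span>Laptop: $1,299.99</span><span>Mouse: $15.00</span>";
        foreach (decimal price in ExtractPrices(snippet))
        {
            Console.WriteLine(price);
        }
    }
}
```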

Developers also need to understand the structure of web pages and how to navigate them. This means knowing how HTML elements and their attributes are nested, and how to write CSS selectors and XPath expressions. Selectors and XPath are essential for web scraping because they let you locate specific elements on a page and extract data from them.
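
As an illustration, the sketch below uses HtmlAgilityPack’s XPath support to read each product’s name and price. It assumes the HtmlAgilityPack NuGet package and a hypothetical page structure in which every product sits in a div with class "product"; adjust the XPath expressions to match the real page.

```csharp
// Assumes the HtmlAgilityPack NuGet package.
using System;
using System.Collections.Generic;
using HtmlAgilityPack;

public static class XPathDemo
{
    // Returns (name, price) pairs for every product card found via XPath.
    public static List<(string Name, string Price)> ExtractProducts(string html)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);

        var results = new List<(string Name, string Price)>();

        // SelectNodes returns null (not an empty collection) when nothing matches.
        HtmlNodeCollection cards = doc.DocumentNode.SelectNodes("//div[@class='product']");
        if (cards == null)
        {
            return results;
        }

        foreach (HtmlNode card in cards)
        {
            // A leading "." makes each XPath relative to the current card.
            HtmlNode name = card.SelectSingleNode(".//h2");
            HtmlNode price = card.SelectSingleNode(".//span[@class='price']");
            if (name != null && price != null)
            {
                // DeEntitize turns entities such as &amp; back into plain characters.
                results.Add((HtmlEntity.DeEntitize(name.InnerText).Trim(),
                             HtmlEntity.DeEntitize(price.InnerText).Trim()));
            }
        }
        return results;
    }

    public static void Main()
    {
        // Hypothetical sample markup.
        const string html = @"<div class=""product""><h2>Desk Lamp</h2><span class=""price"">$24.99</span></div>
<div class=""product""><h2>Office Chair</h2><span class=""price"">$149.00</span></div>";

        foreach (var (name, price) in ExtractProducts(html))
        {
            Console.WriteLine($"{name}: {price}");
        }
    }
}
```

The null check after SelectNodes is not optional: without it, a page with no matching elements would throw a NullReferenceException.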

Overall, understanding the basic concepts of web scraping is essential for anyone looking to use C# for web scraping. With the right tools and techniques, web scraping can become a powerful tool for data analysis and extraction.

How to Scrape Data Using C#?

Web scraping involves extracting data from websites, which can be a tedious and time-consuming process if done manually. C# provides several tools and libraries that can be used to automate the web scraping process and make it more efficient.

To scrape data using C#, first identify the website or web page you want to scrape, then inspect its HTML (for example, with your browser’s developer tools) to find the elements that contain the data. You can then use C# libraries like HtmlAgilityPack, ScrapySharp, and CsQuery to extract the data from the page’s HTML.

One basic technique for web scraping with C# is to send an HTTP GET request to the page using the HttpClient class. The response contains the page’s HTML, which you can parse with one of the HTML parsing libraries mentioned above. The extracted data can then be stored in a format of your choice, such as CSV, JSON, or a database.
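
Putting those steps together, here is a minimal sketch that downloads a page with HttpClient, parses it with HtmlAgilityPack, and saves the results as CSV. The URL https://example.com/articles, the //h2/a[@href] XPath, and the articles.csv file name are placeholders, and the code assumes the HtmlAgilityPack NuGet package.

```csharp
// Assumes the HtmlAgilityPack NuGet package. URL, XPath, and file name are placeholders.
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Net.Http;
using System.Threading.Tasks;
using HtmlAgilityPack;

public static class FetchAndParse
{
    // Reuse one HttpClient for the lifetime of the app instead of one per request.
    private static readonly HttpClient Client = new HttpClient();

    // Extracts the text and href of every node matched by the XPath expression.
    public static List<(string Text, string Href)> ParseLinks(string html, string xpath)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        HtmlNodeCollection nodes = doc.DocumentNode.SelectNodes(xpath);
        if (nodes == null)
        {
            return new List<(string Text, string Href)>();
        }
        return nodes
            .Select(n => (Text: HtmlEntity.DeEntitize(n.InnerText).Trim(),
                          Href: n.GetAttributeValue("href", "")))
            .ToList();
    }

    // Wraps a value in quotes and doubles any embedded quotes, per CSV convention.
    public static string ToCsvField(string value) => "\"" + value.Replace("\"", "\"\"") + "\"";

    public static async Task Main()
    {
        // Step 1: download the page's HTML.
        string html = await Client.GetStringAsync("https://example.com/articles");

        // Step 2: parse it and extract the data.
        var links = ParseLinks(html, "//h2/a[@href]");

        // Step 3: store the results as CSV.
        var lines = new List<string> { "title,url" };
        lines.AddRange(links.Select(l => ToCsvField(l.Text) + "," + ToCsvField(l.Href)));
        await File.WriteAllLinesAsync("articles.csv", lines);

        Console.WriteLine($"Saved {links.Count} rows to articles.csv");
    }
}
```

A single static HttpClient is reused on purpose: creating a new client for every request can exhaust sockets when a scraper makes many requests.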

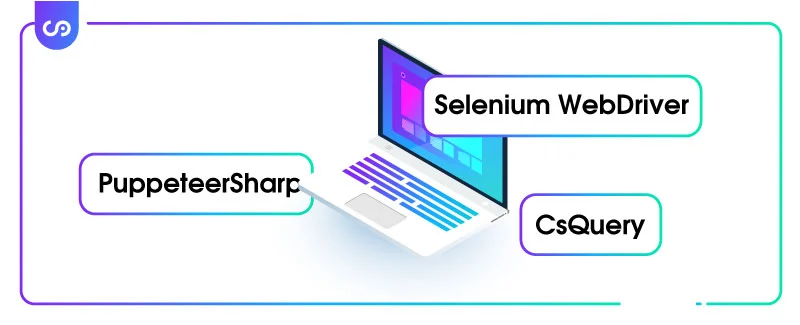

Another useful technique is to use browser automation libraries like PuppeteerSharp and Selenium WebDriver. These drive a real web browser, so you can interact with websites as a user would: clicking buttons, filling out forms, and waiting for content to load. This is especially helpful for dynamic websites that render their content with JavaScript, which a plain HTTP request followed by HTML parsing will not see.
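
Here is a rough sketch of that approach with Selenium WebDriver: it opens a page in headless Chrome, clicks a button, waits for JavaScript-rendered content, and prints it. It assumes the Selenium.WebDriver NuGet package and a local Chrome installation; the URL and the button.load-more and div.product selectors are hypothetical. If your Selenium version does not include WebDriverWait, add the Selenium.Support package.

```csharp
// Assumes the Selenium.WebDriver NuGet package and a local Chrome install.
// The URL and CSS selectors below are hypothetical placeholders.
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;
using OpenQA.Selenium.Support.UI;

public static class SeleniumDemo
{
    // Builds options that run Chrome without a visible window.
    public static ChromeOptions CreateOptions()
    {
        var options = new ChromeOptions();
        options.AddArgument("--headless=new");
        return options;
    }

    public static void Main()
    {
        // Disposing the driver also quits the browser.
        using IWebDriver driver = new ChromeDriver(CreateOptions());
        driver.Navigate().GoToUrl("https://example.com/products");

        // Click a "Load more" button, as a user would.
        driver.FindElement(By.CssSelector("button.load-more")).Click();

        // Wait up to 10 seconds for JavaScript to render at least one product card.
        var wait = new WebDriverWait(driver, TimeSpan.FromSeconds(10));
        wait.Until(d => d.FindElements(By.CssSelector("div.product")).Count > 0);

        foreach (IWebElement card in driver.FindElements(By.CssSelector("div.product")))
        {
            Console.WriteLine(card.Text);
        }
    }
}
```

Waiting on a condition rather than sleeping for a fixed time keeps the scraper fast when the page loads quickly and tolerant when it loads slowly.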

Overall, web scraping with C# involves a combination of HTTP requests, HTML parsing, and data extraction techniques. With the right tools and techniques, you can scrape data from websites quickly and efficiently, saving you time and effort in the long run.

Best Web Scraping Tools for C#

When it comes to web scraping with C#, there are several tools available that make the task easier and more efficient. Here, we will compare some of the most popular web scraping tools for C#.

- HtmlAgilityPack: HtmlAgilityPack is a popular open-source HTML parser that allows users to extract data from HTML files. It can be used to manipulate HTML documents, and it supports XPath and LINQ queries. It is also easy to use and well documented, making it a favorite among developers.

- ScrapySharp: ScrapySharp is a fast and easy-to-use web scraping framework for C#. It is built on top of HtmlAgilityPack and provides a simple API for web scraping. It has support for XPath and CSS selectors, and it can handle cookies and sessions.

- CsQuery: CsQuery is a jQuery-like library for C# that allows users to manipulate HTML documents. It can be used for web scraping, as well as other tasks like template rendering and data filtering. It supports jQuery selectors and has a powerful API for manipulating HTML documents. Note that CsQuery is no longer actively maintained, so for new projects AngleSharp is usually the better choice.

- Selenium: Selenium is a popular open-source web testing tool that can also be used for web scraping. It allows users to automate web browsers and perform actions like clicking buttons and filling out forms. It can be used with C# through the Selenium WebDriver API.

- AngleSharp: AngleSharp is an HTML parser library for .NET that follows modern web standards. It can be used to extract data from HTML documents and provides an easy-to-use DOM API for manipulating them, including CSS selector queries through QuerySelector and QuerySelectorAll.
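
To show how ScrapySharp layers CSS selectors on top of HtmlAgilityPack, here is a small sketch using its CssSelect extension method on a parsed document. It assumes the ScrapySharp NuGet package (which depends on HtmlAgilityPack); the ScrapySharpDemo class, the markup, and the class names are hypothetical.

```csharp
// Assumes the ScrapySharp NuGet package, which brings in HtmlAgilityPack.
using System;
using System.Collections.Generic;
using System.Linq;
using HtmlAgilityPack;
using ScrapySharp.Extensions;

public static class ScrapySharpDemo
{
    // Uses ScrapySharp's CssSelect extension on HtmlAgilityPack nodes to read
    // the name inside each product card (hypothetical class names).
    public static List<string> ProductNames(string html)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        return doc.DocumentNode
                  .CssSelect("div.product")
                  .Select(card => card.CssSelect("span.name").FirstOrDefault())
                  .Where(node => node != null)
                  .Select(node => node.InnerText.Trim())
                  .ToList();
    }

    public static void Main()
    {
        const string html = @"<div class=""product""><span class=""name"">Desk Lamp</span></div>
<div class=""product""><span class=""name"">Office Chair</span></div>";

        ProductNames(html).ForEach(Console.WriteLine);
    }
}
```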

Each of these tools has its own strengths and weaknesses, so it is important to choose the one that best fits your needs. For simple scraping tasks, HtmlAgilityPack or ScrapySharp may be sufficient, while more complex tasks may require the use of a full-featured browser automation tool like Selenium.

Advanced Web Scraping Techniques

Web scraping is a powerful technique that can be used to extract a wide range of data from websites. While basic web scraping techniques can be effective in many cases, there are times when more advanced techniques are required. In this section, we will explore some of the advanced web scraping techniques that can be used with C#.

Handling pagination is necessary when the data you want is spread across multiple pages. The scraper extracts data from one page, then moves on to the next, either by following the “next page” link or by incrementing a page number in the URL, until all of the desired data has been collected. This is common on e-commerce websites, where products are often listed across many pages.
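
A minimal sketch of the page-number approach follows. It assumes, hypothetically, that the site paginates with a ?page=N query parameter and that an empty page marks the end. Downloading and parsing a single page is passed in as a delegate, so the loop itself can be exercised with a stand-in fetcher. Only the .NET base class library is used, and the PaginationDemo names are illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class PaginationDemo
{
    // Assumption: the site uses a "?page=N" query parameter.
    public static string PageUrl(string baseUrl, int page) => $"{baseUrl}?page={page}";

    // Requests pages 1, 2, 3, ... until a page yields no items or maxPages is reached.
    // fetchPage downloads one page and parses it into items.
    public static async Task<List<string>> ScrapeAllPagesAsync(
        Func<string, Task<IReadOnlyList<string>>> fetchPage,
        string baseUrl,
        int maxPages,
        TimeSpan delayBetweenPages)
    {
        var all = new List<string>();
        for (int page = 1; page <= maxPages; page++)
        {
            IReadOnlyList<string> pageItems = await fetchPage(PageUrl(baseUrl, page));
            if (pageItems.Count == 0)
            {
                break; // An empty page means we have gone past the last one.
            }
            all.AddRange(pageItems);
            await Task.Delay(delayBetweenPages); // Be polite to the server.
        }
        return all;
    }

    public static async Task Main()
    {
        // Stand-in fetcher: pretends the site has three pages of two items each.
        Task<IReadOnlyList<string>> FakeFetch(string url)
        {
            int page = int.Parse(url.Substring(url.LastIndexOf('=') + 1));
            IReadOnlyList<string> pageItems = page <= 3
                ? new[] { $"item {page}a", $"item {page}b" }
                : Array.Empty<string>();
            return Task.FromResult(pageItems);
        }

        List<string> collected = await ScrapeAllPagesAsync(
            FakeFetch, "https://example.com/products", 50, TimeSpan.Zero);
        Console.WriteLine($"Collected {collected.Count} items");
    }
}
```

The maxPages cap guards against sites that keep returning content for any page number, and the delay keeps the request rate modest.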

Data filtering is another advanced web scraping technique that can be used to refine the data that is collected. This involves setting specific criteria for the data that is scraped, such as only collecting data that meets certain conditions. Data filtering is particularly useful when scraping large datasets, as it can help to reduce the amount of irrelevant data that is collected.
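
In C#, LINQ is a natural fit for this kind of filtering. The sketch below keeps only in-stock products under a price limit and removes duplicate listings; the Product record, its fields, and the FilterDemo class are hypothetical stand-ins for whatever your scraper produces.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of one scraped item.
public record Product(string Name, decimal Price, bool InStock);

public static class FilterDemo
{
    // Keeps in-stock products priced at or below maxPrice, drops duplicates that
    // differ only in letter case (keeping the first one seen), and sorts by price.
    public static List<Product> Filter(IEnumerable<Product> scraped, decimal maxPrice) =>
        scraped
            .Where(p => p.InStock && p.Price <= maxPrice)
            .GroupBy(p => p.Name, StringComparer.OrdinalIgnoreCase)
            .Select(g => g.First())
            .OrderBy(p => p.Price)
            .ToList();

    public static void Main()
    {
        var scraped = new List<Product>
        {
            new Product("USB Cable", 9.99m, true),
            new Product("Monitor", 249.00m, true),
            new Product("usb cable", 9.99m, true),
            new Product("Keyboard", 39.50m, false),
        };

        foreach (Product p in Filter(scraped, 100m))
        {
            Console.WriteLine($"{p.Name}: {p.Price}");
        }
    }
}
```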

API integration is another way to access data from websites. APIs (Application Programming Interfaces) let applications request data from web services directly, usually as structured JSON or XML. When a site offers an official API, or its pages load their data from a JSON endpoint, calling that endpoint is often simpler and more reliable than parsing HTML. Many APIs require authentication, typically an API key or access token sent with each request.
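
As a sketch, calling a JSON API from C# needs only HttpClient and System.Text.Json. The endpoint https://api.example.com/v1/products, the API_TOKEN environment variable, the Bearer authentication scheme, and the JSON shape are all assumptions; check the real API’s documentation for its URL, authentication method, and response format.

```csharp
// The endpoint, token variable, auth scheme, and JSON shape are hypothetical.
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;
using System.Threading.Tasks;

public class ApiProduct
{
    public string Name { get; set; } = "";
    public decimal Price { get; set; }
}

public static class ApiDemo
{
    private static readonly JsonSerializerOptions Options = new JsonSerializerOptions
    {
        // Lets "name" in the JSON map to the Name property.
        PropertyNameCaseInsensitive = true
    };

    // Converts a JSON array of products into C# objects.
    public static List<ApiProduct> ParseProducts(string json) =>
        JsonSerializer.Deserialize<List<ApiProduct>>(json, Options) ?? new List<ApiProduct>();

    public static async Task Main()
    {
        using var client = new HttpClient();

        // Read the token from the environment instead of hard-coding it.
        string token = Environment.GetEnvironmentVariable("API_TOKEN") ?? "";
        if (token.Length > 0)
        {
            client.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("Bearer", token);
        }

        string json = await client.GetStringAsync("https://api.example.com/v1/products");
        foreach (ApiProduct product in ParseProducts(json))
        {
            Console.WriteLine($"{product.Name}: {product.Price}");
        }
    }
}
```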

When using advanced web scraping techniques, be aware of the potential ethical and legal implications. Review each website’s terms of service and robots.txt file, obtain permission where it is required, and make sure the data you collect is used appropriately.

Advanced web scraping in C# often relies on browser automation libraries like Selenium and PuppeteerSharp (the .NET port of Puppeteer), which automate a real browser and interact with websites in a more human-like manner. They are particularly useful for websites that require you to log in, and for dynamic pages that load their data with JavaScript.
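
For example, logging in before scraping might look like the following Selenium sketch. The login URL, the username and password field names, the submit-button selector, the dashboard element id, and the SCRAPER_USER/SCRAPER_PASSWORD environment variables are all hypothetical; it assumes the Selenium.WebDriver package and a local Chrome install.

```csharp
// Assumes Selenium.WebDriver and Chrome. URL, field names, selectors,
// and environment variable names are hypothetical placeholders.
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;
using OpenQA.Selenium.Support.UI;

public static class LoginDemo
{
    // Reads credentials from environment variables so they never live in source code.
    public static (string User, string Password) ReadCredentials()
    {
        string user = Environment.GetEnvironmentVariable("SCRAPER_USER")
            ?? throw new InvalidOperationException("SCRAPER_USER is not set");
        string password = Environment.GetEnvironmentVariable("SCRAPER_PASSWORD")
            ?? throw new InvalidOperationException("SCRAPER_PASSWORD is not set");
        return (user, password);
    }

    public static void Main()
    {
        var (user, password) = ReadCredentials();

        using IWebDriver driver = new ChromeDriver();
        driver.Navigate().GoToUrl("https://example.com/login");

        // Fill out and submit the login form, as a user would.
        driver.FindElement(By.Name("username")).SendKeys(user);
        driver.FindElement(By.Name("password")).SendKeys(password);
        driver.FindElement(By.CssSelector("button[type='submit']")).Click();

        // WebDriverWait ignores "element not found" errors while polling,
        // so this waits until the post-login page has rendered.
        var wait = new WebDriverWait(driver, TimeSpan.FromSeconds(10));
        IWebElement dashboard = wait.Until(d => d.FindElement(By.Id("dashboard")));
        Console.WriteLine(dashboard.Text);
    }
}
```

Reading credentials from the environment keeps them out of source control and makes it easy to use different accounts in different environments.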

Troubleshooting and Common Issues

Web scraping with C# can be a complex process that can sometimes result in unexpected errors or issues. In this section, we will discuss some of the common issues that may arise during web scraping with C# and how to troubleshoot them.

One common issue is website blocking. Some websites use security measures to prevent scraping, such as rate limiting, IP blocking, or CAPTCHA challenges. To reduce the chance of being blocked, throttle your requests, identify your scraper with a descriptive User-Agent header, and respect the site’s robots.txt. Some scrapers also route traffic through proxy servers or a VPN to mask their IP address, but deliberately circumventing a site’s blocking may breach its terms of service.
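
A minimal sketch of a well-behaved client is shown below: it sends a descriptive User-Agent, pauses between requests, and can optionally route traffic through a proxy. The scraper name, contact URL, page URLs, 30-second timeout, and two-second delay are placeholder values, not recommendations from any particular site.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

public static class PoliteClientDemo
{
    // Optionally routes traffic through a proxy; pass null to connect directly.
    public static HttpClientHandler CreateHandler(Uri proxyAddress)
    {
        var handler = new HttpClientHandler();
        if (proxyAddress != null)
        {
            handler.Proxy = new WebProxy(proxyAddress);
            handler.UseProxy = true;
        }
        return handler;
    }

    // Identifies the scraper via the User-Agent header (placeholder name and URL).
    public static HttpClient CreateClient(HttpClientHandler handler)
    {
        var client = new HttpClient(handler) { Timeout = TimeSpan.FromSeconds(30) };
        client.DefaultRequestHeaders.UserAgent.ParseAdd("ExampleScraper/1.0 (+https://example.com/bot-info)");
        return client;
    }

    public static async Task Main()
    {
        // Disposing the client also disposes the handler.
        using HttpClient client = CreateClient(CreateHandler(null));
        string[] urls = { "https://example.com/page1", "https://example.com/page2" };

        foreach (string url in urls)
        {
            string html = await client.GetStringAsync(url);
            Console.WriteLine($"{url}: {html.Length} characters");

            // Pause between requests so the scraper doesn't hammer the server.
            await Task.Delay(TimeSpan.FromSeconds(2));
        }
    }
}
```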

Another issue is data inconsistency. Websites are updated frequently, and changes to a page’s structure can silently break your selectors. To keep your data consistent, monitor the pages regularly, check that your scraper still finds the elements it expects, and update your code when the layout changes.
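
One way to catch layout changes early is to check, on every run, that the XPath expressions your scraper depends on still match something. The sketch below assumes the HtmlAgilityPack NuGet package; the FindBrokenSelectors helper and the sample selectors are hypothetical.

```csharp
// Assumes the HtmlAgilityPack NuGet package. Selectors are hypothetical.
using System;
using System.Collections.Generic;
using System.Linq;
using HtmlAgilityPack;

public static class LayoutCheckDemo
{
    // Returns the XPath expressions that no longer match anything on the page.
    public static List<string> FindBrokenSelectors(string html, IEnumerable<string> requiredXPaths)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        return requiredXPaths
            .Where(xpath => doc.DocumentNode.SelectSingleNode(xpath) == null)
            .ToList();
    }

    public static void Main()
    {
        string[] required = { "//h1[@class='product-title']", "//span[@class='price']" };

        // Simulates a redesigned page where the price element's class was renamed.
        string html = "<h1 class='product-title'>Desk Lamp</h1><span class='cost'>$24.99</span>";

        List<string> broken = FindBrokenSelectors(html, required);
        if (broken.Count > 0)
        {
            Console.Error.WriteLine("Page layout may have changed; selectors with no match:");
            broken.ForEach(xpath => Console.Error.WriteLine("  " + xpath));
        }
    }
}
```

Failing loudly here is usually better than quietly writing empty or partial records to your dataset.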

To optimize web scraping performance, write efficient code and avoid unnecessary requests to the server. Useful techniques include caching pages you have already downloaded, running requests in parallel with a cap on concurrency so you don’t overload the server, and requesting only the pages and data you actually need.
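
The sketch below combines two of these ideas: an in-memory cache so each URL is downloaded only once, and a SemaphoreSlim that caps how many downloads run at the same time. The CachedFetcher class is a hypothetical helper; the actual download function is passed in (in a real scraper it would wrap HttpClient.GetStringAsync), and the cache lives only as long as the object.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public class CachedFetcher
{
    private readonly Func<string, Task<string>> _download;
    private readonly SemaphoreSlim _throttle;

    // Caches the download task per URL so each page is fetched at most once.
    private readonly ConcurrentDictionary<string, Lazy<Task<string>>> _cache = new();

    public CachedFetcher(Func<string, Task<string>> download, int maxConcurrency)
    {
        _download = download;
        _throttle = new SemaphoreSlim(maxConcurrency);
    }

    public Task<string> GetAsync(string url) =>
        _cache.GetOrAdd(url, u => new Lazy<Task<string>>(() => DownloadThrottledAsync(u))).Value;

    private async Task<string> DownloadThrottledAsync(string url)
    {
        await _throttle.WaitAsync(); // At most maxConcurrency downloads run at once.
        try
        {
            return await _download(url);
        }
        finally
        {
            _throttle.Release();
        }
    }

    // Fetches all URLs in parallel; results come back in the same order as the input.
    public Task<string[]> GetAllAsync(IEnumerable<string> urls) =>
        Task.WhenAll(urls.Select(url => GetAsync(url)));

    public static async Task Main()
    {
        int downloads = 0;

        // Stand-in downloader; a real scraper would call HttpClient.GetStringAsync here.
        async Task<string> FakeDownload(string url)
        {
            Interlocked.Increment(ref downloads);
            await Task.Delay(50);
            return $"<html>{url}</html>";
        }

        var fetcher = new CachedFetcher(FakeDownload, maxConcurrency: 2);
        string[] urls = { "/a", "/b", "/a", "/c", "/b" };
        string[] pages = await fetcher.GetAllAsync(urls);
        Console.WriteLine($"{pages.Length} results, {downloads} downloads");
    }
}
```

Caching the Task rather than the finished string means that two requests for the same URL issued at the same time still share a single download.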

When errors occur during web scraping, your code should handle them gracefully rather than crash. This includes catching exceptions, logging errors, and retrying transient failures (such as timeouts or temporary server errors) to avoid data loss.
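
A common retry strategy is exponential backoff, where the wait doubles after each failure. This sketch retries only on HttpRequestException, which HttpClient.GetStringAsync throws for network errors and non-success status codes, and lets every other exception propagate; the RetryAsync helper, the attempt count, and the delays are illustrative assumptions.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class RetryDemo
{
    // Runs an async operation, retrying on HttpRequestException with exponential
    // backoff (baseDelay, 2x baseDelay, 4x baseDelay, ...). After maxAttempts
    // failures, the last exception propagates to the caller.
    public static async Task<T> RetryAsync<T>(Func<Task<T>> operation, int maxAttempts, TimeSpan baseDelay)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return await operation();
            }
            catch (HttpRequestException ex) when (attempt < maxAttempts)
            {
                TimeSpan delay = TimeSpan.FromMilliseconds(
                    baseDelay.TotalMilliseconds * Math.Pow(2, attempt - 1));
                Console.Error.WriteLine($"Attempt {attempt} failed ({ex.Message}); retrying in {delay.TotalSeconds:0.##}s");
                await Task.Delay(delay);
            }
        }
    }

    public static async Task Main()
    {
        using var client = new HttpClient();
        try
        {
            string html = await RetryAsync(
                () => client.GetStringAsync("https://example.com/"), 3, TimeSpan.FromSeconds(1));
            Console.WriteLine($"Downloaded {html.Length} characters");
        }
        catch (HttpRequestException ex)
        {
            // Every attempt failed: log it and move on instead of crashing the whole run.
            Console.Error.WriteLine($"Giving up: {ex.Message}");
        }
    }
}
```

HttpClient timeouts surface as TaskCanceledException rather than HttpRequestException, so add that type to the exception filter if you want timeouts retried as well.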

Overall, web scraping with C# can be a powerful tool for extracting data from the web, but it requires careful planning, implementation, and monitoring. By following best practices for troubleshooting and error handling, you can ensure the successful execution of your web scraping projects.

Conclusion

Web scraping is a powerful tool for extracting data from websites and is widely used across industries for various applications. As highlighted in this article, C# is an excellent language for web scraping thanks to its rich libraries and frameworks, which makes it a strong choice for businesses and developers looking to collect, analyze, and use data to drive insights and decision-making.

To get started with web scraping using C#, it’s essential to set up the C# environment and integrate web scraping libraries and frameworks. Basic concepts and techniques of web scraping must be mastered before moving to advanced web scraping techniques such as pagination, data filtering, and API integration.

The best web scraping tools for C# include popular libraries like HtmlAgilityPack, ScrapySharp, AngleSharp, and CsQuery, along with browser automation tools such as Selenium for dynamic pages. These tools provide features and capabilities that make web scraping more accessible and efficient.

Web scraping can be a complex process, so be prepared to troubleshoot and resolve the common issues that arise. Follow best practices for error handling and data quality assurance to ensure accurate and reliable data extraction.

Overall, web scraping with C# is a valuable skill for businesses and developers, and it’s worth staying up to date with the latest web scraping trends and technologies to get the most out of it.

FAQs

What is web scraping with C#?

Web scraping with C# is the process of automatically extracting data from websites using the C# programming language. It involves writing code to access the HTML of a web page, identifying and extracting specific pieces of data, and storing that data in a structured format for further analysis.

What are the benefits of web scraping with C#?

The benefits of web scraping with C# include automating data collection from websites, saving time and effort, obtaining data that is otherwise difficult or impossible to access, and enabling data-driven decision making.

What are the common web scraping tools used with C#?

Some common web scraping tools used with C# include HtmlAgilityPack, ScrapySharp, AngleSharp, and CsQuery. These tools provide functionality for parsing HTML, navigating website structures, and extracting data from web pages.

How do I handle errors and maintain data quality while web scraping with C#?

To handle errors and maintain data quality while web scraping with C#, it is important to implement error handling and data validation techniques in your code. This includes verifying that the data being scraped is accurate and relevant, monitoring the performance of your web scraping code, and implementing error-handling mechanisms to handle errors that may arise during the scraping process.

Is web scraping with C# legal?

The legality of web scraping with C# (or any other programming language) depends on the specific use case and the laws of the country in which the scraping takes place. Scraping publicly accessible data is often lawful, but factors such as the website’s terms of service, whether you bypass logins or other access controls, whether the data is personal or copyrighted, and whether you use it commercially can all affect the answer. It is important to consult legal counsel and review the terms of service of the websites being scraped before engaging in any web scraping activities.